SiFive Blog

The latest insights, and deeper technology dives, from RISC-V leaders

Enabling AI Innovation at The Far Edge

SiFive’s X100-Series, Part of the Second Generation SiFive Intelligence Family

Right now, there is a massive focus on “up and to the right” hardware technologies that can push AI performance to new heights in data centers. At the Hot Chips 2025 conference, hyperscalers' thirst for more performance seemed to drive the vast majority of the agenda, and the big, powerful chips stole the spotlight.

Yet, away from the performance headliners and press articles about the cloud industry, there is a vast opportunity for tinyML at the far edge. The far edge is a diverse collection of sensor-rich endpoints that collect, pre-process, and analyze real-world data before submitting metadata to the cloud. This graph forecasts the fast-growing AI workloads that will run in the edge, mobile, PC, and IoT markets.

The reason behind this growth is simple: not all AI tasks can or should be done in the cloud or a large data center. Massive opportunities exist wherever internet access is inadequate, real-time responsiveness is required, or privacy matters, because cloud-based solutions bring network dependencies, data latency, and security and privacy concerns. Even when cloud-based solutions are used, running AI locally reduces data movement costs and power consumption, and is more sustainable. While other IP suppliers have “paused” their product development for this set of use cases, SiFive is doubling down: we are investing heavily in broadening our offering for the diverse use cases at the far edge and complementing those processors with reference AI software stacks. The focus is on enabling our customers to create and deploy leadership products in their target markets in the most cost- and time-effective way.

What does it take to bring efficient AI to the edge?

Given the pace of innovation in AI models (convolutional neural networks are being replaced by transformer models, and some industry experts are already predicting the death of those), building a flexible architecture that can adapt is key. To enable AI everywhere, companies developing products for edge use cases are focused on improving latency, optimizing bandwidth usage, and enhancing data security. These systems typically face tight resource constraints, including power, cost, and size. Many vertical markets have additional unique needs: in healthcare, complying with data privacy laws is paramount; in industrial and manufacturing sites, real-time responsiveness is crucial; in automotive, connectivity is not guaranteed.

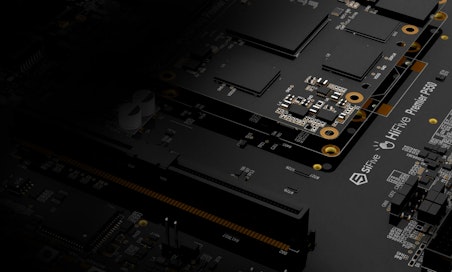

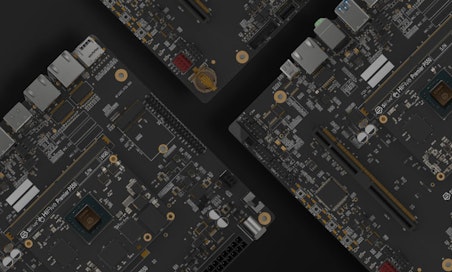

The industry uses a wide range of names for this broad set of applications, one of the more recent being “Physical AI”. Whatever market categorization is used, at SiFive we embrace Local AI and are showing why open-standard RISC-V is the best foundation for these systems, with advantages in power, size, and common software. Starting with just one product in 2021, the first generation of our Intelligence product family has become very popular, with over 40 design wins across a diverse set of IoT applications. To meet increasing and broader market demand, the 2nd generation Intelligence products launched officially today. The combination of dedicated scalar and vector pipelines in our new Intelligence products gives our customers choice, scalability, and an opportunity to innovate in the creation of accelerated compute solutions. The smallest of these, the new X100 series, was designed based on direct feedback from customers about the need for more efficient AI inference at the deeply embedded edge.

Our products do not just operate as standalone processors; they can also be paired with customer accelerators. While specialized accelerators can deliver great performance and efficiency, their function is fixed and can be limiting. As SiFive co-founder Krste mentions in his product launch video, one of the key advantages of the Second Generation Intelligence family is that it enables the creation of ‘intelligent accelerators’, which combine powerful fixed-function accelerators with a programmable front end. This is accomplished by incorporating two direct-to-core interfaces specifically designed for data movement and control functions.

Another aspect of AI is data type support. All Second Generation Intelligence products now support Bfloat16. Originally developed by Google Brain, Bfloat16 was designed specifically for the needs of neural network training and inference. Importantly, this data type allows AI inference to achieve a level of accuracy comparable to the standard 32-bit floating-point format while reducing memory usage and speeding up computation. This is an example of a function that has only recently started being deployed at scale and, therefore, isn’t supported on many legacy products. While I focus here on the capabilities of the Second Generation Intelligence family, you can imagine that SiFive is actively tracking other industry standards and proposals about data types and engaging with customers to determine the future direction of this product family.
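To make the memory trade-off concrete, here is a minimal Python sketch of how a 32-bit float maps to Bfloat16. It relies only on the publicly documented format (1 sign bit, 8 exponent bits, 7 mantissa bits, which is the upper half of an IEEE 754 float32) and is not a description of how SiFive hardware implements the conversion. The function names are hypothetical and chosen for illustration.

```python
import struct


def float32_to_bfloat16_bits(x: float) -> int:
    """Return the 16-bit Bfloat16 pattern for x, rounding to nearest even.

    Illustrative helper (hypothetical name). Values outside the float32
    range raise OverflowError from struct.pack.
    """
    if x != x:  # NaN: return a quiet-NaN pattern instead of rounding
        return 0x7FC0
    # Reinterpret the value as its 32-bit IEEE 754 bit pattern.
    bits32 = struct.unpack("<I", struct.pack("<f", x))[0]
    # Round to nearest even before dropping the low 16 mantissa bits.
    rounding_bias = 0x7FFF + ((bits32 >> 16) & 1)
    return ((bits32 + rounding_bias) >> 16) & 0xFFFF


def bfloat16_bits_to_float32(bits16: int) -> float:
    """Expand a Bfloat16 pattern back to a float by zero-filling the low bits."""
    return struct.unpack("<f", struct.pack("<I", (bits16 & 0xFFFF) << 16))[0]


if __name__ == "__main__":
    for value in (1.0, 3.14159, 1e38):
        bits = float32_to_bfloat16_bits(value)
        print(f"{value!r} -> 0x{bits:04X} -> {bfloat16_bits_to_float32(bits)!r}")
```

Because the 8-bit exponent is kept intact, a value as large as 1e38 still fits, and only precision is lost (roughly two to three significant decimal digits remain). Each value takes two bytes instead of four, which is where the memory and bandwidth savings come from.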

There are two members of the X100 product series at this point, the X160 and the X180. The latter is a 64-bit processor. One piece of early customer feedback was that these cores would typically be paired with a 64-bit host processor (usually running Linux). In that case, using the X180 simplifies the memory map and the sharing of information between the cores.

So, what does all this mean for vertical markets that are developing and adopting Local AI solutions? Dedicated vector processing in small form factors means AI workloads can run faster and with less power, ultimately enabling more diverse solutions that deliver real-time responses with enhanced privacy and without requiring network connectivity. Having the option of a single scalable architecture that spans the breadth of edge AI applications makes product development and software programming much simpler. Product roadmaps can be future-proofed against the rapidly changing AI model landscape because custom instructions can be added. Instead of being restricted to limited processor options and siloed software stacks, the RISC-V open standard means your product engineers can think big about tinyML.

The opportunity is so great, and the advantages so compelling, that even before launch the products have already been licensed by two top-tier US semiconductor manufacturers to build into their future edge AI SoCs. You can read more on these case studies here, and you can read more details about the X100 product series.

If you want to discuss how SiFive products can be part of your company’s AI journey, please contact us.

Ian Ferguson, VP Vertical Markets at SiFive